Abstract

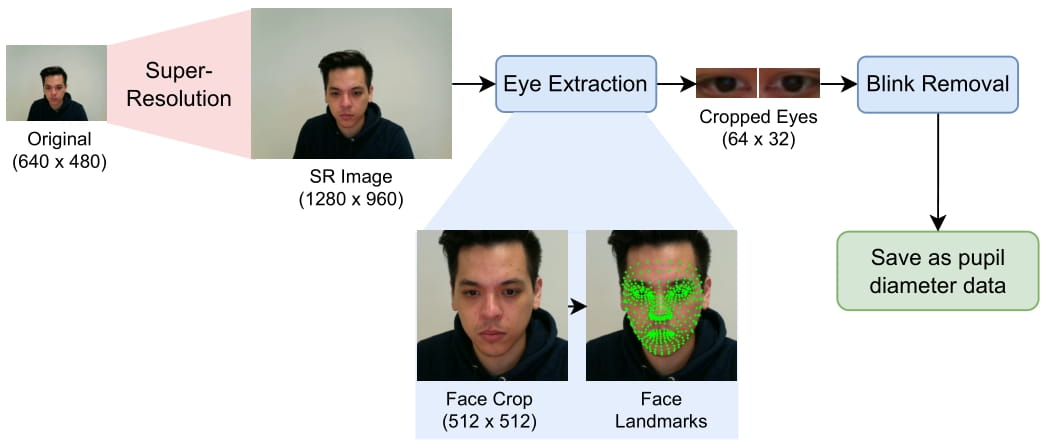

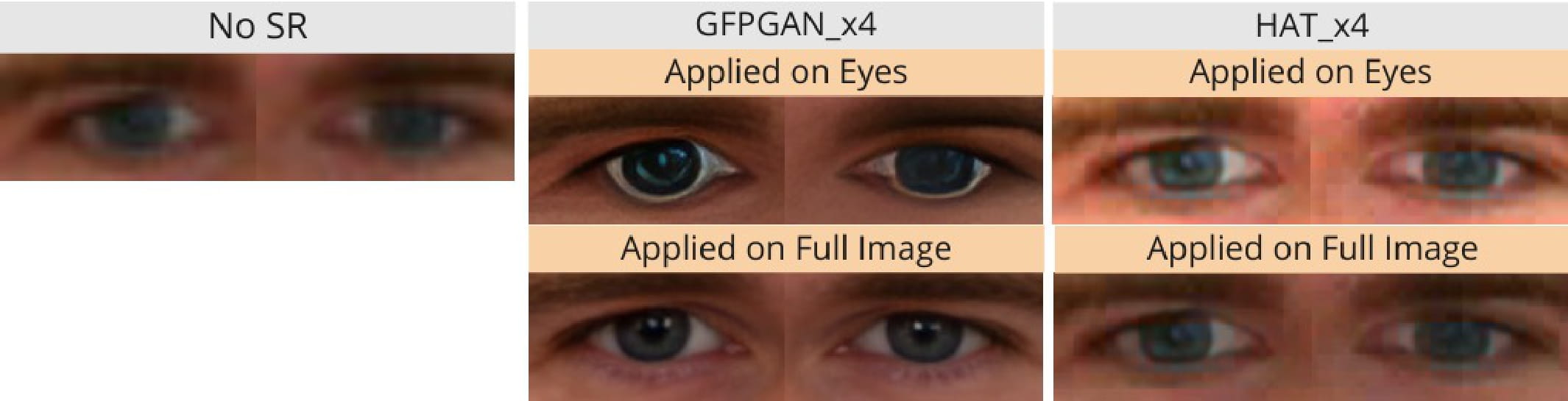

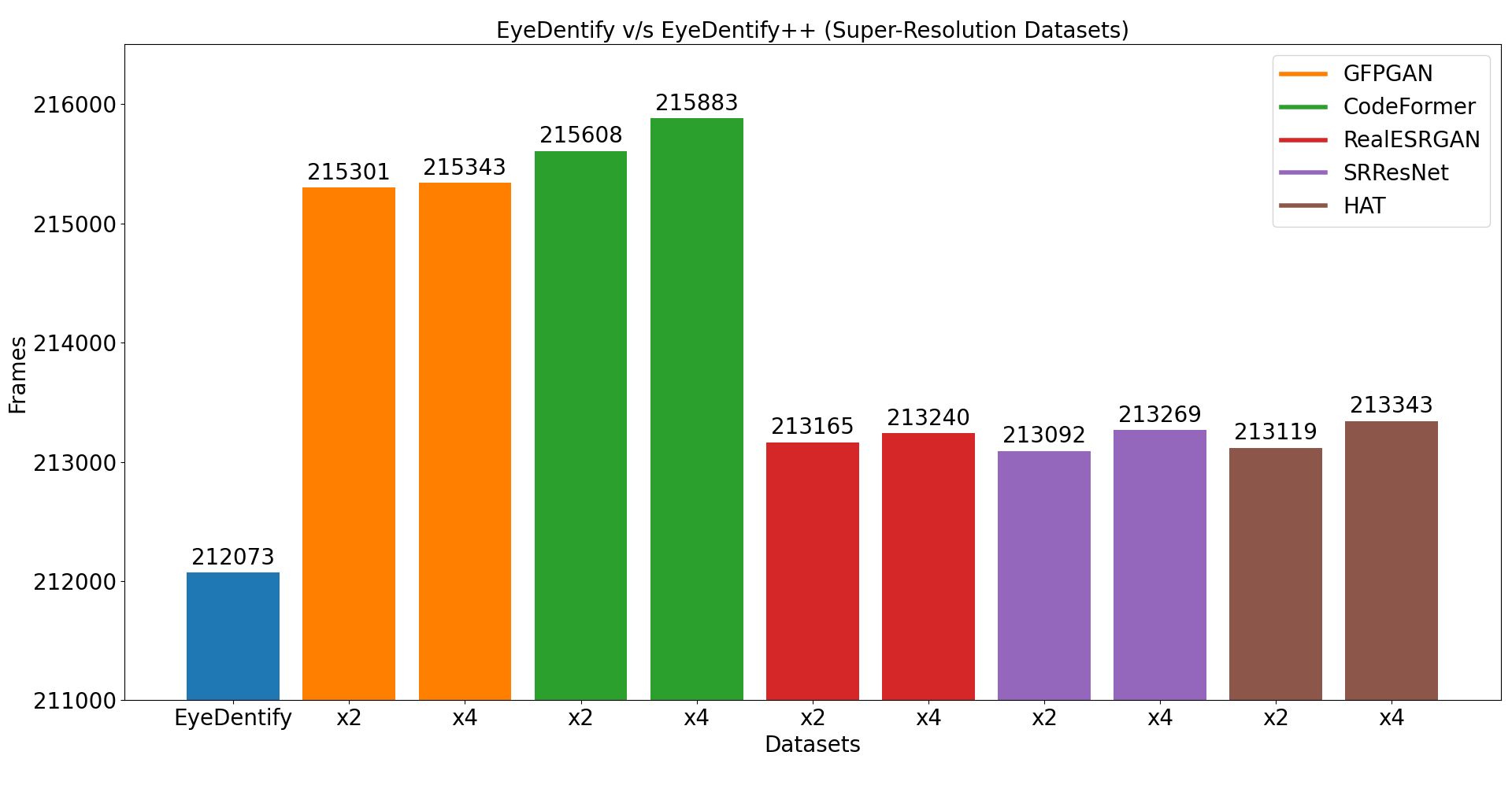

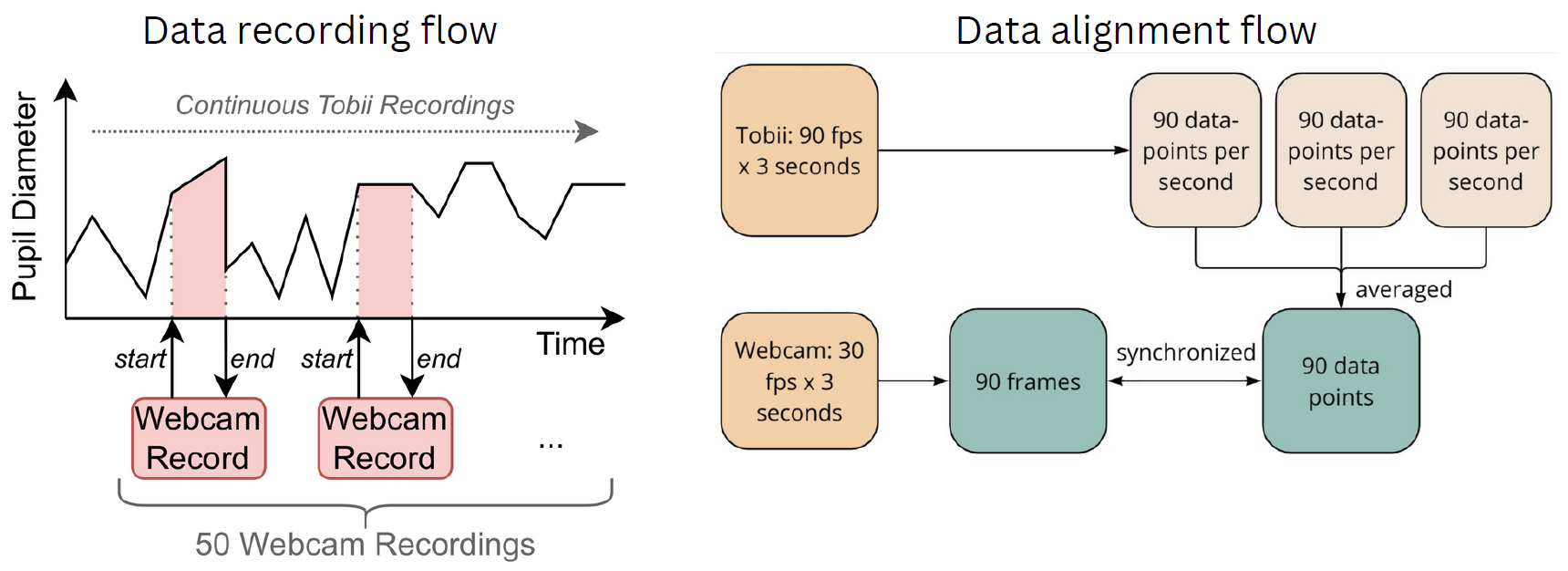

In this work, we introduce EyeDentify, a dataset specifically designed for pupil diameter estimation from webcam images. EyeDentify addresses the lack of available datasets for pupil diameter estimation, a domain crucial for understanding physiological and psychological states that has traditionally been dominated by highly specialized sensor systems such as Tobii. Unlike these advanced and costly sensor systems, webcams are commonly available in practice, yet deep learning models that can estimate pupil diameter from standard webcam data are scarce. By providing cropped eye images alongside the corresponding pupil diameter information, EyeDentify enables the development and refinement of models designed specifically for less-equipped environments. In doing so, it helps democratize pupil diameter estimation by making it more accessible and broadly applicable, which in turn benefits research on human activity and applications in healthcare.

Dataset Comparison

| Dataset | Participants | Amount of data [frames] | Public | Gaze Coordinates | Pupil Diameter |
|---|---|---|---|---|---|
| MAEB [1] | 20 | 1,440 | ✗ | ✓ | ✗ |
| MPIIFaceGaze [2] | 15 | 213,659 | ✓ | ✓ | ✗ |
| Dembinsky et al. [3] | 19 | 648,000 | ✓ | ✓ | ✗ |
| Gaze360 [4] | 238 | 172,000 | ✓ | ✓ | ✗ |
| ETH-XGaze [5] | 110 | 1,083,492 | ✓ | ✓ | ✗ |
| VideoGazeSpeech [6] | unknown | 35,231 | ✓ | ✓ | ✗ |
| Ricciuti et al. [7] | 17 | 20,400 | ✗ | ✓ | ✓ |
| Caya et al. [8] | 16 | unknown | ✗ | ✓ | ✓ |
| EyeDentify (ours) | 51 | 212,073 | ✓ | ✓ | ✓ |

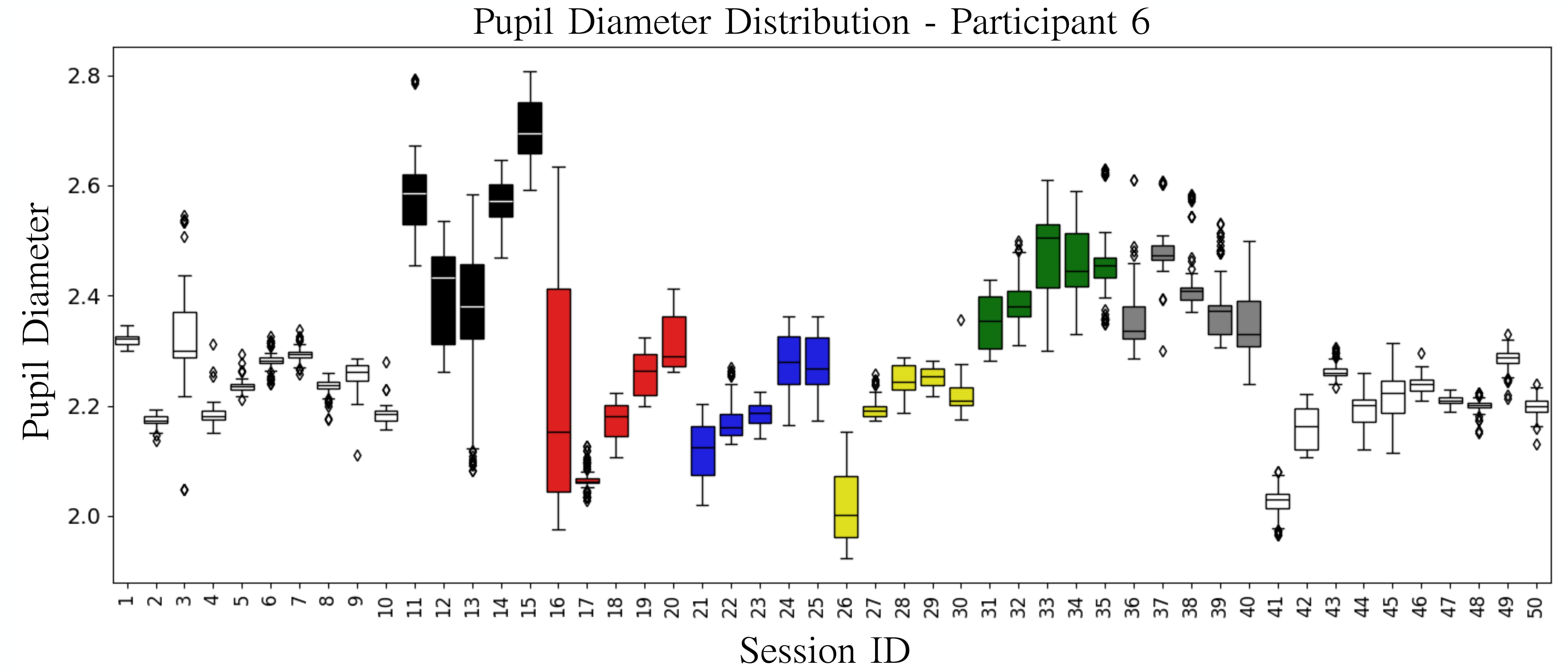

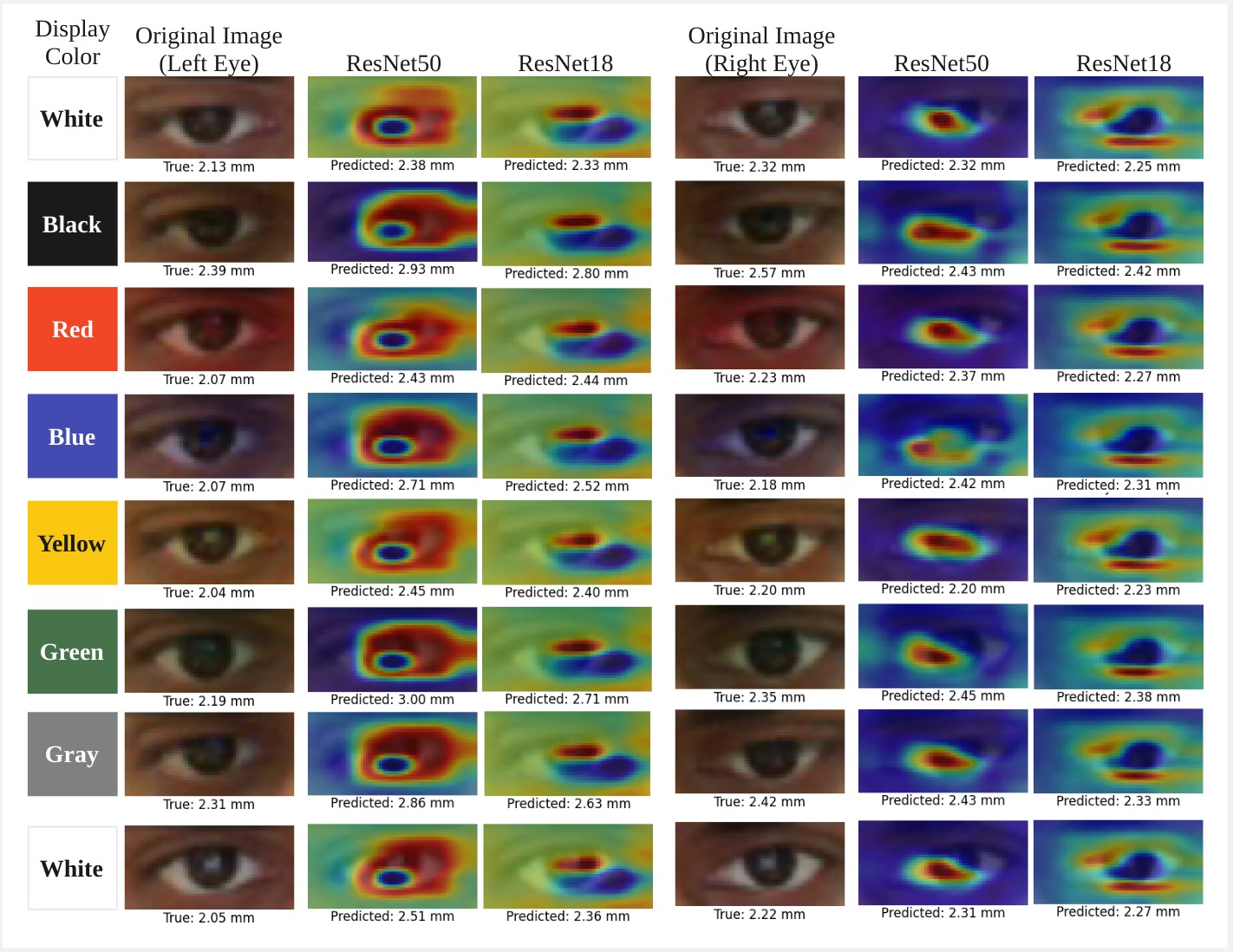

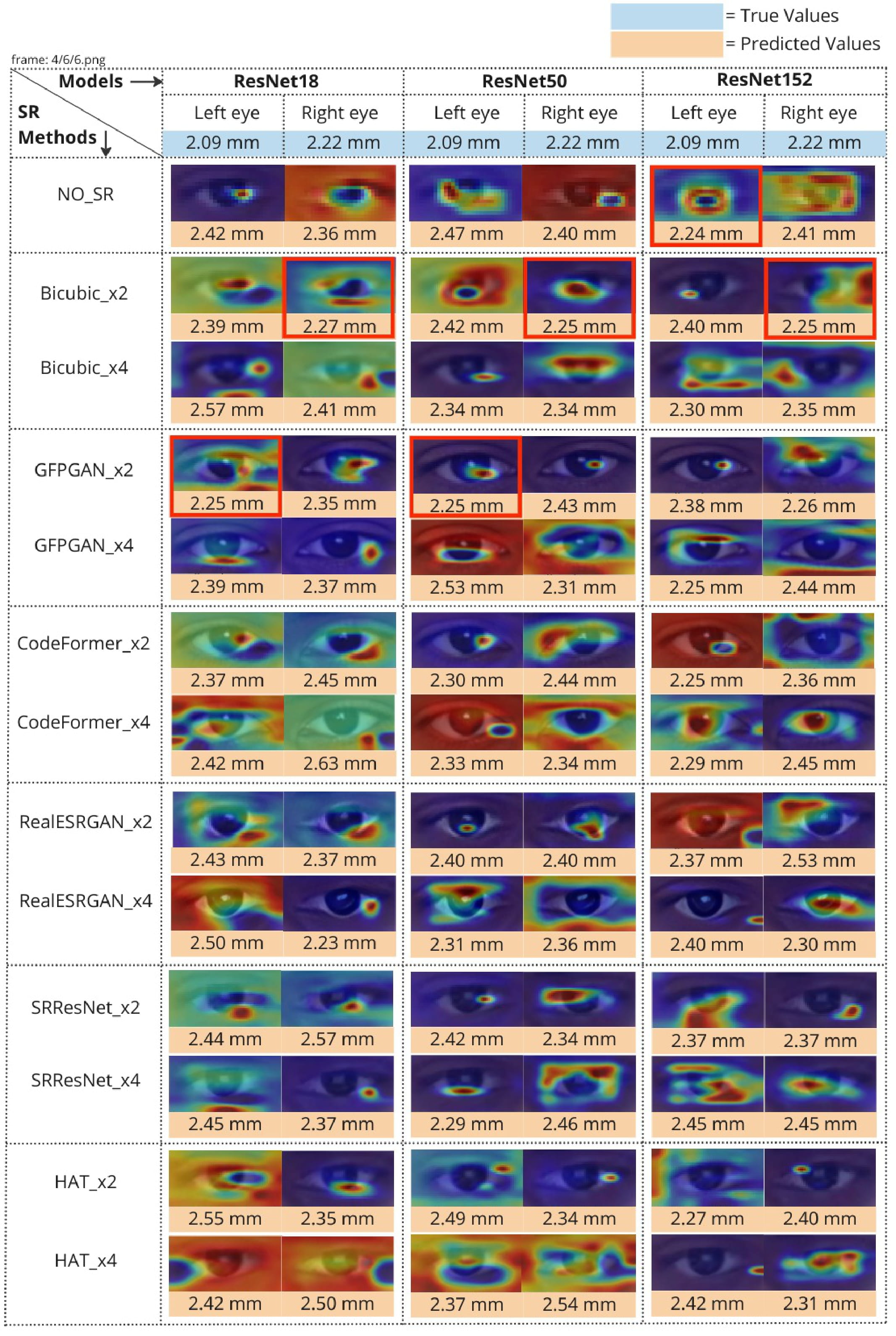

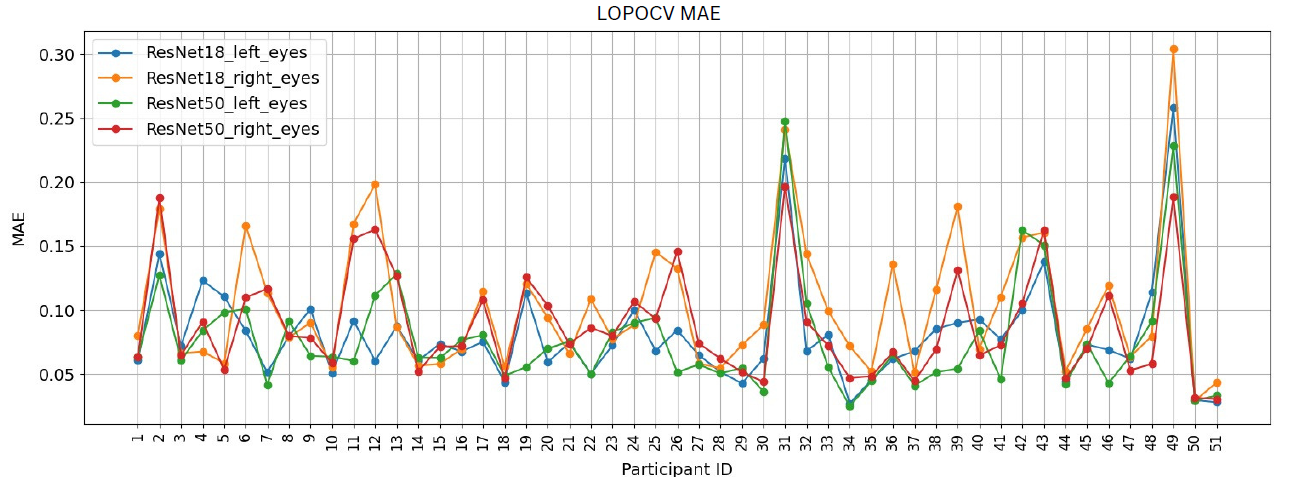

Results

| Eye | Model | Validation MAE ↓ | Test MAE ↓ |
|---|---|---|---|
| Left | ResNet-18 | 0.0837 ± 0.0135 | 0.1340 ± 0.0196 |
| Left | ResNet-50 | 0.1001 ± 0.0197 | 0.1426 ± 0.0167 |
| Right | ResNet-18 | 0.1054 ± 0.0173 | 0.1403 ± 0.0328 |
| Right | ResNet-50 | 0.1089 ± 0.0204 | 0.1588 ± 0.0203 |
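The results are reported as mean absolute error (MAE) with a ± spread, where lower is better. The page does not say what the spread is computed over; the Python sketch below assumes it is the sample standard deviation of per-fold MAE across cross-validation folds. The function names `mae` and `summarize_folds` and the toy values are hypothetical illustrations, not EyeDentify code or data, and no unit is assumed for the diameters.

```python
from statistics import mean, stdev


def mae(predictions, targets):
    """Mean absolute error between predicted and ground-truth pupil diameters."""
    if not predictions or len(predictions) != len(targets):
        raise ValueError("predictions and targets must be non-empty and equally long")
    return sum(abs(p - t) for p, t in zip(predictions, targets)) / len(predictions)


def summarize_folds(fold_results):
    """Return (mean, sample standard deviation) of the per-fold MAE values.

    Assumption: each element of fold_results is a (predictions, targets) pair
    for one cross-validation fold; at least two folds are required by stdev.
    """
    scores = [mae(preds, targets) for preds, targets in fold_results]
    return mean(scores), stdev(scores)


if __name__ == "__main__":
    # Hypothetical toy predictions/targets for three folds (not EyeDentify data).
    folds = [
        ([3.1, 4.0, 2.9], [3.0, 4.2, 3.0]),
        ([3.5, 3.8], [3.4, 3.6]),
        ([2.7, 4.4, 3.3, 3.9], [2.9, 4.3, 3.3, 4.0]),
    ]
    avg, sd = summarize_folds(folds)
    print(f"MAE: {avg:.4f} ± {sd:.4f}")
```

Using the sample standard deviation (`statistics.stdev`) rather than the population version is itself an assumption; with only a handful of folds the two can differ noticeably.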